Our networks need protocols to avoid transfer errors, so how does the quantum network handle this? A transfer error occurs when network data is sent but not received, like when a catcher drops a ball from a pitcher. For example, if one point sends data to another when it is busy, the transfer is lost, just as a ball thrown when the catcher isn’t ready is dropped. Equally, if two points transfer to the same point at the same time, one is lost, just as when two pitchers throw to one catcher at the same time, catching one ball means dropping the other.
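The two cases above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the names `Receiver` and `send_all` are invented here, and a point is assumed to handle exactly one transfer per step.

```python
class Receiver:
    """A point that can accept only one transfer per step (an assumption)."""

    def __init__(self):
        self.busy = False
        self.received = []

    def accept(self, data):
        # A transfer that arrives while the point is busy is dropped.
        if self.busy:
            return False
        self.busy = True
        self.received.append(data)
        return True

    def finish(self):
        # The point becomes free again once it has processed its transfer.
        self.busy = False


def send_all(receiver, transfers):
    """Deliver simultaneous transfers to one point; return the lost ones."""
    lost = [data for data in transfers if not receiver.accept(data)]
    receiver.finish()
    return lost


catcher = Receiver()
lost = send_all(catcher, ["ball 1", "ball 2"])
```

Here two transfers arrive in the same step, so the receiver catches the first and `lost` holds the second.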

But does it matter if a transfer is lost? After all, it’s just information. Unfortunately, the money in your bank account is also just information, so a failed bank transfer could lose it all! If two people call you at once, it may not matter that one gets a busy signal, but on a network, losing a transfer loses what it represents. In a virtual world like Sim City, objects are represented by information, so a failed transfer could make the sword in your hand suddenly disappear. Networks can’t afford to lose transfers.

Our universe has, as far as we know, conserved energy for billions of years, so if the movement of every photon in our universe is a transfer, none have been lost, or we would notice it. If our world is virtual, the network generating it must avoid transfer errors, but how? Our networks avoid transfer errors by protocols like:

1. Locking. Makes a receiver exclusively available before sending the transfer.

2. Synchrony. Synchronizes all transfers to a common clock.

3. Buffers. Stores transfer overloads in a buffer memory.

Could a quantum network use any of these methods?

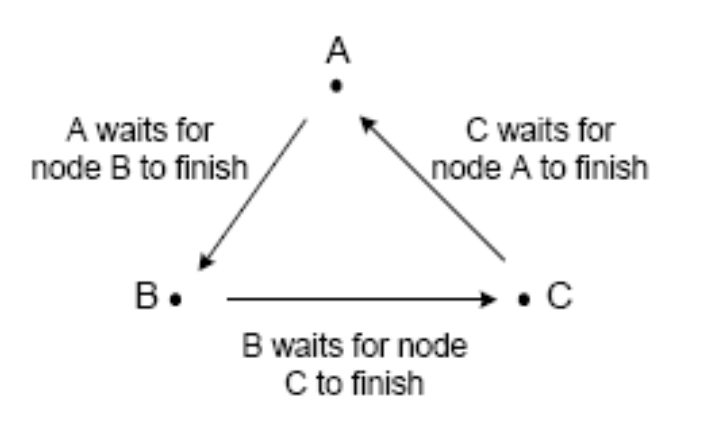

Locking. Locking makes a receiver dedicate itself to a sender before the transfer is sent. For example, if I edit this document, my laptop locks it exclusively, so any other edit attempt fails with a message that it is in use. Otherwise, if the same document is edited twice, the last save will overwrite the changes of the first, which are lost. Locking avoids this by making every transfer two steps instead of one, but it also allows transfer deadlock (Figure 2.13), where point A is waiting to confirm a lock from B, which is waiting for a lock from C, which is waiting for a lock from A, so they all wait forever. If the quantum network used locking, we would occasionally find dead areas of space unavailable for use, but we never have, so it can’t use locking to avoid transfer losses.
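The circular wait that defines deadlock can be made concrete with a small Python sketch. It assumes each point waits on at most one other point, and the function name `find_deadlock` is hypothetical, chosen only for this example.

```python
def find_deadlock(waiting_for):
    """Return the points caught in a circular wait, or [] if there are none.

    waiting_for maps each point to the single point whose lock it awaits.
    """
    for start in waiting_for:
        seen = []
        point = start
        # Follow the chain of waits until it ends or revisits a point.
        while point in waiting_for and point not in seen:
            seen.append(point)
            point = waiting_for[point]
        if point in seen:
            # The chain looped back on itself: these points wait forever.
            return seen[seen.index(point):]
    return []


# A waits for B, B waits for C, and C waits for A.
stuck = find_deadlock({"A": "B", "B": "C", "C": "A"})
```

For the three-point loop above, `stuck` lists A, B and C, while a chain that simply ends (A waits for B, B waits for C, C waits for nothing) gives an empty list.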

Synchrony. On a computer motherboard, when a fast central processing unit (CPU) sends data to a slower memory register, it must wait before sending another transfer. If it sends data again too soon, the transfer fails because the register is still busy with the first transfer. On the other hand, if it waits too long, it wastes valuable CPU cycles. It can’t check if the memory is free before sending because that is a new command that also needs checking! Using the double-send of locking would slow the motherboard down, so it uses a common clock to synchronize events. The CPU sends data to memory when the clock ticks, then sends more data when it ticks again. This avoids transfer losses if the clock is set to the speed of the slowest component, plus some slack. One can increase a motherboard’s clock rate to make it run faster, but too much over-clocking will crash it. Synchrony requires a common time, but according to relativity, our universe doesn’t have one. If the quantum network had a central clock, it would cycle at the rate of its slowest part, say a black hole, which would be massively inefficient, so it can’t use synchrony to avoid transfer losses either.
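A rough Python sketch shows why the clock must follow the slowest component. The timings are made-up units, and the function names `clock_period` and `count_lost` are assumptions for this illustration, not part of any real hardware interface.

```python
def clock_period(component_times, slack=1):
    """Set the common clock to the slowest component, plus some slack."""
    return max(component_times) + slack


def count_lost(send_interval, busy_time, n_transfers):
    """Count transfers that fail because the register is still busy."""
    free_at = 0  # time at which the register can accept data again
    lost = 0
    for i in range(n_transfers):
        now = i * send_interval
        if now < free_at:
            lost += 1  # sent too soon: the register is still busy
        else:
            free_at = now + busy_time
    return lost


cpu_time, memory_time = 1, 4  # hypothetical speeds, in arbitrary units
period = clock_period([cpu_time, memory_time])
lost_unsynced = count_lost(cpu_time, memory_time, 10)
lost_synced = count_lost(period, memory_time, 10)
```

Sending at the CPU's own rate loses 7 of the 10 transfers in this toy case, while sending on the common clock loses none, at the cost of the CPU idling for most of each tick.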

Buffers. The Internet uses memory buffers to avoid transfer errors so it can be decentralized. Protocols like Ethernet (Note 1) distribute control, to let each point run at its own rate, and buffers handle the overloads. If a point is busy when a transfer arrives, a buffer stores it to be processed later. Buffers let fast devices work with slow ones. If a laptop sends a document to a printer, it goes to a buffer that feeds the printer in slow time, so you can carry on working while the document prints. But buffers require planning, as buffers that are too big waste memory and buffers that are too small can overload. Internet buffers are matched to load, so backbone servers like New York have big buffers, but backwaters like New Zealand have small ones. In our universe, a star is like a big city while empty space is a backwater. If the quantum network used buffers, where stars occur would have to be predictable, which according to quantum theory isn’t so. Equally, giving every point the same buffers would be a massive waste of memory in the vastness of empty space, so it can’t use buffers to avoid transfer losses either.
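The planning problem with buffers can be seen in a minimal Python sketch of a fixed-size buffer. The class name `BufferedPoint` and the capacities used are hypothetical, picked only to show both failure modes.

```python
from collections import deque


class BufferedPoint:
    """A point that stores incoming transfers in a fixed-size buffer."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.dropped = 0

    def receive(self, data):
        # A full buffer overloads, so the new transfer is lost.
        if len(self.queue) >= self.capacity:
            self.dropped += 1
            return False
        self.queue.append(data)
        return True

    def process_one(self):
        # Handle the oldest stored transfer, if there is one.
        return self.queue.popleft() if self.queue else None


small, big = BufferedPoint(2), BufferedPoint(100)
for page in range(5):
    small.receive(page)
    big.receive(page)
unused = big.capacity - len(big.queue)
```

With five transfers arriving before any are processed, the small buffer drops three of them, while the big buffer loses nothing but leaves 95 of its 100 slots unused.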

Our networks avoid transfer losses by locking, synchrony, or buffers, but quantum events proceed one step at a time so the quantum network can’t use locking, our universe has no common time so it can’t use synchrony, and the quantum network has no memory storage so it can’t use buffers. The quantum network can’t wait for locks or clocks, and has no buffer memory, so how can it avoid transfer errors?

Note 1. Ethernet uses CSMA/CD (Carrier Sense Multiple Access with Collision Detection). In this decentralized protocol, multiple clients access the network carrier if they sense no activity but withdraw gracefully if they detect a collision.