Ackerman, M. S. (2000). “The intellectual challenge of CSCW: The gap between social requirements and technical feasibility,” Human-Computer Interaction, 15, pp. 179–203.

Beer, D., & Burrows, R. (2007). “Sociology and, of and in Web 2.0: Some Initial Considerations.” Sociological Research Online, 12(5).

Berners-Lee, T. (2000). Weaving the Web: The original design and ultimate destiny of the world wide web. New York: HarperCollins.

Boutin, P. (2004). “Can e-mail be saved?” InfoWorld, 14, April 19, pp. 40–53.

Burk, D. L. (2001). “Copyrightable functions and patentable speech.” Communications of the ACM, 44, (2), pp. 69–75.

Callahan, D. (2004). The Cheating Culture. Orlando: Harcourt.

Eigen, P. (2003). Transparency International Corruption Perceptions Index 2003. Transparency International.

Foreman, B., & Whitworth, B. (2005). Information Disclosure and the Online Customer Relationship. In Proceedings of the Quality, Values and Choice Workshop, Computer Human Interaction, 2005, (pp. 1–7). Portland, Oregon.

Hoffman, L. R., & Maier, N. R. F. (1961). “Quality and acceptance of problem solutions by members of homogeneous and heterogeneous groups.” Journal of Abnormal and Social Psychology, 62, pp. 401–407.

Johnson, D. G. (2001). Computer Ethics. Upper Saddle River, New Jersey: Prentice-Hall.

Lessig, L. (1999). Code and other laws of cyberspace. New York: Basic Books.

Messaging Anti-Abuse Working Group (MAAWG). (2006). Email Metrics Program, First Quarter 2006 Report, retrieved April 1, 2007. For 2012.

Mandelbaum, M. (2002). The Ideas That Conquered the World. New York: Public Affairs.

MessageLabs. (2006). The year spam raised its game; 2007 predictions. Accessed 2006.

MessageLabs. (2010). Intelligence Annual Security Report, 2010. Accessed 2010.

Meyrowitz, J. (1985). No Sense of Place: The impact of electronic media on social behavior. New York: Oxford University Press.

Mitchell, W. J. (1995). City of Bits: Space, Place, and the Infobahn. Cambridge, MA: MIT Press.

Porra, J., & Hirschheim, R. (2007). “A lifetime of theory and action on the ethical use of computers: A dialogue with Enid Mumford,” JAIS, 8, (9), pp. 467–478.

Poundstone, W. (1992). Prisoner’s Dilemma. New York: Doubleday, Anchor.

Reid, F. J. M., Malinek, V., Stott, C. J. T., & Evans, J. S. B. T. (1996). “The messaging threshold in computer-mediated communication,” Ergonomics, 39, (8), pp. 1017–1037.

Ridley, M. (2010). The Rational Optimist: How Prosperity Evolves. New York: Harper.

Robert, H. M. (1993). The New Robert’s Rules of Order. New York: Barnes & Noble.

Samuelson, P. (2003). “Unsolicited Communications as Trespass.” Communications of the ACM, 46, (10), pp. 15–20.

Shirky, C. (2008). Here Comes Everybody: The Power of Organizing Without Organizations. London: Penguin.

Short, J., Williams, E., & Christie, B. (1976). “Visual communication and social interaction – The role of ‘medium’ in the communication process.” In J. A. Short, E. Williams, & B. Christie (Eds.), The Social Psychology of Telecommunications, (pp. 43–60). New York: Wiley.

Spence, R., & Apperley, M. (2011). “Bifocal Display.” In Mads Soegaard & Rikke Friis Dam (Eds.), Encyclopedia of Human-Computer Interaction. Aarhus, Denmark: The Interaction-Design.org Foundation. http://www.interaction-design.org/encyclopedia/bifocal_display.html

Weiss, A. (2003). “Ending spam’s free ride,” netWorker, 7 (2), pp. 18–24.

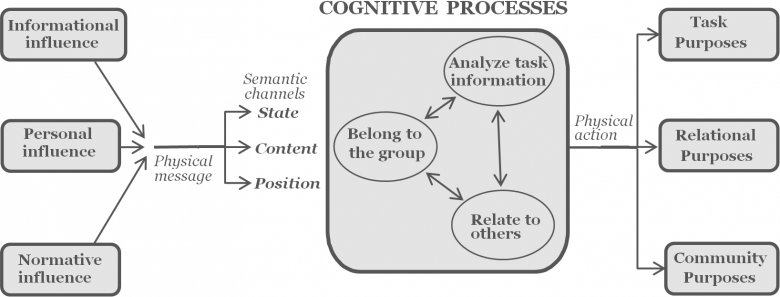

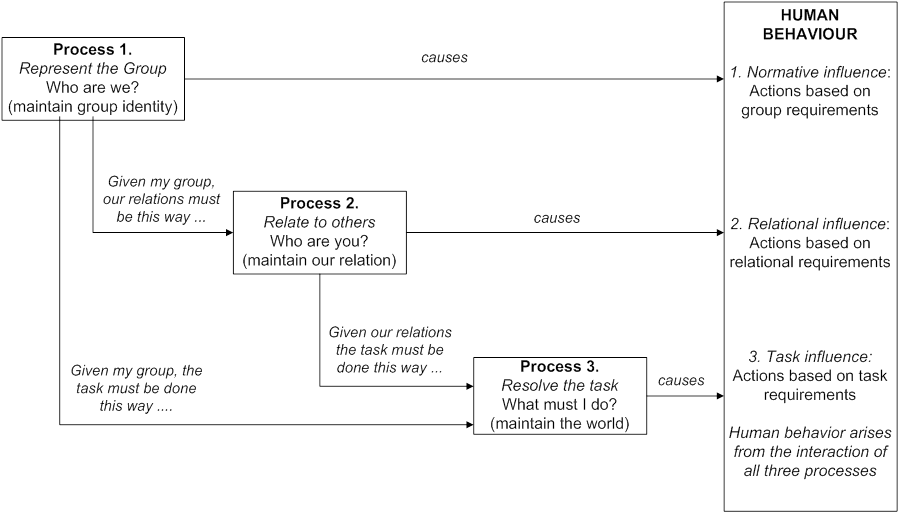

Whitworth, B., Gallupe, R. B., & McQueen, R. (2000). “A cognitive three-process model of computer-mediated group interaction.” Group Decision and Negotiation, 9, (5), pp. 431–456.

Whitworth, B., Van de Walle, B., & Turoff, M. (2000). Beyond rational decision making. In Proceedings of the Group Decision and Negotiation 2000 Conference, (pp. 1–13). Glasgow, Scotland.

Whitworth, B., Gallupe, B., & McQueen, R. (2001). “Generating agreement in computer-mediated groups.” Small Group Research, 32, (5), pp. 621–661.

Whitworth, B., & de Moor, A. (2003). “Legitimate by design: Towards trusted virtual community environments.” Behaviour & Information Technology, 22 (1), pp. 31–51.

Whitworth, B., & Whitworth, E. (2004). “Spam and the social-technical gap,” IEEE Computer, 37 (10), pp. 38–45.

Whitworth, B., & Liu, T. (2009). “Channel email: Evaluating social communication efficiency,” IEEE Computer, 42 (7), pp. 63–72.

Whitworth, B., & Whitworth, A. (2010). “The Social Environment Model: Small Heroes and the Evolution of Human Society,” First Monday, 15 (11).

Wright, R. (2001). Nonzero: The logic of human destiny. New York: Vintage Books.