Table 3.1 below categorizes various communication media by message richness and linkage, with electronic media in italics. For example, a phone call is interpersonal audio but a letter is interpersonal text. A book is a document broadcast, radio is broadcast audio and TV is broadcast video. The Internet can broadcast documents (web sites), audio (podcasts) or videos (YouTube). Email allows one-way interpersonal text messages, while Skype adds two-way audio and video. Chat is few-to-few matrix text communication, as is instant messaging but with known people. Blogs are text broadcasts that also allow comment feedback. Online voting is matrix communication, as many communicate with many in one operation.

| Richness (rows) / Linkage (columns) | Broadcast | Interpersonal | Matrix |
|---|---|---|---|
| Position | Footprint, Flare, Scoreboard, Scream | Posture, Gesture, Nodding, Salute, Smiley | Show of hands, Applause, An election, Web counter, Karma system, Tag cloud, Online vote, Reputation system, Social bookmarks |
| Document | Poster, Book, Web site, Blog, Online photo, News feed, Online review, Instagram, Twitter (text) | Letter, Note, Email, Texting, Instant message, Social network | Chat, Twitter, Wiki, E-market, Bulletin board, Comment system, Advice board, Social network |
| Audio | Radio, Loud-speaker, Record, CD, Podcast, Online music | Telephone, Answer-phone, Cell phone, Skype | Choir, Radio talk-back, Conference call, Skype conference call |
| Multi-media (Video) | Speech, Show, Television, Movie, DVD, YouTube | Face-to-face conversation, Chatroulette, Video-phone, Skype video | Face-to-face meeting, Cocktail party, Video-conference, MMORPG, Simulated world |

Table 3.1: Communication media by richness and linkage

Note that Twitter is both broadcast (text) and matrix (when people “follow” others), and that social networks are also both interpersonal and matrix communication.
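The two-dimensional classification can be sketched as a small data structure. This is only an illustrative sketch: the names `Richness`, `Linkage`, `MEDIA` and `linkages` are hypothetical, and only a handful of cells from Table 3.1 are copied in. Each medium maps to a set of (richness, linkage) cells, so a medium like Twitter can occupy more than one cell.

```python
from enum import Enum, auto


class Richness(Enum):
    POSITION = auto()
    DOCUMENT = auto()
    AUDIO = auto()
    VIDEO = auto()


class Linkage(Enum):
    BROADCAST = auto()
    INTERPERSONAL = auto()
    MATRIX = auto()


# A few entries copied from Table 3.1 (not the whole table).
# Each medium maps to the set of table cells it appears in.
MEDIA = {
    "Book": {(Richness.DOCUMENT, Linkage.BROADCAST)},
    "Email": {(Richness.DOCUMENT, Linkage.INTERPERSONAL)},
    "Podcast": {(Richness.AUDIO, Linkage.BROADCAST)},
    "Skype video": {(Richness.VIDEO, Linkage.INTERPERSONAL)},
    "Online vote": {(Richness.POSITION, Linkage.MATRIX)},
    "Twitter": {
        (Richness.DOCUMENT, Linkage.BROADCAST),
        (Richness.DOCUMENT, Linkage.MATRIX),
    },
    "Social network": {
        (Richness.DOCUMENT, Linkage.INTERPERSONAL),
        (Richness.DOCUMENT, Linkage.MATRIX),
    },
}


def linkages(medium):
    """Return the set of linkage types under which a medium is listed."""
    return {linkage for _, linkage in MEDIA[medium]}


if __name__ == "__main__":
    for name in ("Twitter", "Social network", "Email"):
        print(name, sorted(l.name for l in linkages(name)))
```

Storing a set of cells per medium, rather than a single cell, is what lets the sketch express the point made above about Twitter and social networks.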

Computers are said to allow anytime, anywhere communication, but while asynchronous email communication lets senders ignore distance and time, synchronous communication like Skype does not, as one cannot call someone who is sleeping. The world is flat, as Friedman says, but the day is still round. The main contribution of technology is allowing communication for less effort, e.g. an email is easier to send than a posted letter. Lowering the message threshold means that more messages are sent (Reid et al., 1996). Email stores a message until the receiver can view it, but a face-to-face message is ephemeral; it disappears if you are not there to get it. Yet being unable to edit a sent message makes sender state streams, like tone of voice, more genuine.

Media richness theory framed communication in terms of media richness, so electronic communication was expected to move directly to video, but that was not what happened. eBay’s reputations, Amazon’s book ratings, Slashdot’s karma, tag clouds, social bookmarks and Twitter are not rich at all. Table 3.1 shows that computer communication evolved by linkage as well as richness. Computer chat, blogs, messaging, tags, karma, reputations and wikis are all high linkage but low richness.

Communication that combines high richness and high linkage is interface expensive, e.g. a face-to-face meeting lets rich channels and matrix communication give factual, sender state and group state information. The medium also lets real-time contentions between people talking at once be resolved naturally. Everyone can see who the others choose to look at, so whoever gets the group focus continues to speak. To do the same online would require not only many video streams on every screen but also a mechanism to manage media conflicts. Who controls the interface? If each person controls his or her own view there is no commonality, while if one person controls the view like a film editor there is no group action. Can electronic groups act democratically like face-to-face groups do?

In audio-based tagging, the person speaking automatically makes his or her video central (Figure 3.6). The interface is common but it is group-directed, i.e. democratic. Gaze-based tagging is the same except that when people look at a person, that person’s window expands on everyone’s screen. It is in effect a group-directed bifocal display (Spence and Apperley, 2012). When matrix communication is combined with media richness, online meetings will start to match face-to-face meetings in terms of communication performance.

Figure 3.6: Audio-based video tagging
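The two tagging schemes can be sketched in a few lines of code. This is a minimal sketch under stated assumptions, not a description of any real conferencing system: the function names, the normalized audio levels and the `threshold` value are all hypothetical, and a real system would also need to smooth these signals over time. In both cases the shared view is computed from what the group does, not set by any one person.

```python
from collections import Counter


def audio_tagged_focus(audio_levels, threshold=0.1):
    """Audio-based tagging: pick whose video becomes central.

    audio_levels maps participant -> current audio level (assumed 0..1).
    Returns the loudest participant at or above the (hypothetical)
    threshold, or None if nobody is speaking.
    """
    if not audio_levels:
        return None
    speaker = max(audio_levels, key=audio_levels.get)
    return speaker if audio_levels[speaker] >= threshold else None


def gaze_tagged_weights(gaze_targets):
    """Gaze-based tagging: window weight per participant.

    gaze_targets maps viewer -> the participant that viewer looks at.
    Each person's weight is the share of all gazes directed at them,
    so the most-watched window would expand on everyone's screen.
    """
    counts = Counter(gaze_targets.values())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {person: n / total for person, n in counts.items()}


if __name__ == "__main__":
    print(audio_tagged_focus({"Ann": 0.05, "Bob": 0.6, "Cy": 0.2}))
    print(gaze_tagged_weights({"Ann": "Bob", "Cy": "Bob", "Bob": "Ann"}))
```

Because every participant's screen would be driven by the same computed focus, the view stays common while remaining group-directed.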

A social model of communication suggests why video-phones are technically viable today but video-phoning is still not the norm. Consider its disadvantages, like having to shave or put on lipstick to call a friend or having to clean up the background before calling Mum. Some even prefer text to video because it is less rich, as they do not want a big conversation.

In sum, computer communication is about linkage as well as richness because communication acts involve senders and receivers as well as messages. Communication also varies by system level, as is now discussed.

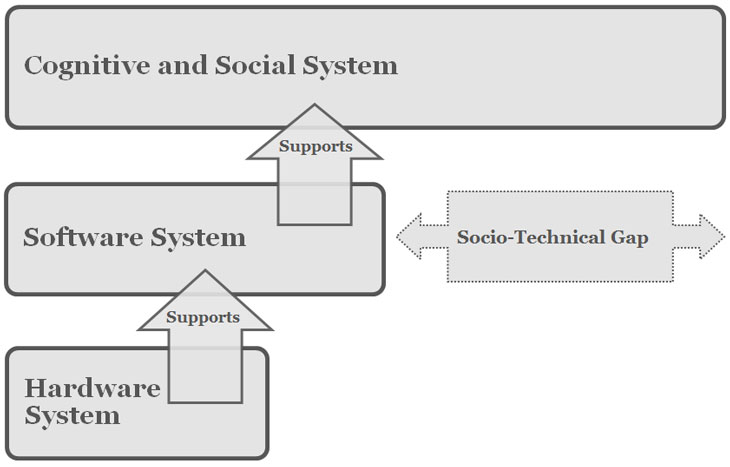

The term socio-technical
