Chapter 2. Discussion Questions

Research questions from the list below and give your answer, with reasons and examples. If you are reading this chapter as part of a class – either at a university or in a commercial course – work in pairs then report back to the class.

1) What three widespread computing expectations did not happen? Why not? What three unexpected computing outcomes did happen? Why?

2) What is a system requirement? How does it relate to system design? How do system requirements relate to performance? Or to system evaluation criteria? How can one specify or measure system performance if there are many factors?

3) What is the basic idea of general systems theory? Why is it useful? Can a cell, your body, and the earth all be considered systems? Describe Lovelock’s Gaia Hypothesis. How does it link to both General Systems Theory and the recent film Avatar? Is every system contained within another system (environment)?

4) Does nature have a best species? Note that success in biology is what survives. Since over 99% of all species that ever existed are now extinct, every species existing today is a great success, and bacteria and viruses are as “evolved” as us. If nature has no better or worse, how can species evolve to be better? Or if it has a better and worse, why is current life so varied instead of being just the “best”?

5) Does computing have a best system? If it has no better or worse, how can it evolve? If it has a better and worse, why is current computing so varied? Which animal actually is “the best”?

6) Why did the electronic office increase paper use? Give two good reasons to print an email in an organization. How often do you print an email? When will the use of paper stop increasing?

7) Why was social gaming not predicted? Why are MMORPG human opponents better than computer ones? What condition must an online game satisfy for a community to “mod” it (add scenarios)?

8) In what way is computing an “elephant”? Why can it not be put into an academic “pigeon hole”? How can science handle cross-discipline topics?

9) What is the first step of system design? What are those who define what a system should do called? Why can designers not satisfy every need? Give examples from house design.

10) Is reliability an aspect of security or is security an aspect of reliability? Can both these things be true? What are reliability and security both aspects of? What decides which is more important?

11) What is a design space? What is the efficient frontier of a design space? What is a design innovation? Give examples (not a vacuum cleaner).

12) Why did the SGML academic community find Tim Berners-Lee’s WWW proposal of low quality? Why did they not see the performance potential? Why did Microsoft also find it “of no business value”? How did the WWW eventually become a success? Given that business and academia now use it extensively, why did they reject it initially? What have they learnt from this lesson?

13) Are NFRs like security different from functional requirements? By what logic are they less important? By what logic are they equally critical to performance?

14) In general systems theory (GST), every system has what two aspects? Why does decomposing a system into simple parts not fully explain it? What is left out? Define holism. Why are highly holistic systems also individualistic? What is the Frankenstein effect? Show a “Frankenstein” web site. What is the opposite effect? Why does sticking “good” components together not always produce a good system?

15) What are the elemental parts of a system? What are its constituent parts? Can elemental parts be constituent parts? What connects elemental and constituent parts? Give examples.

16) Why are constituent part specializations important in advanced systems? Why do we specialize as left-handers or right-handers? What about the ambidextrous?

17) If a car is a system, what are its boundary, structure, effector and receptor constituents? Explain its general system requirements, with examples. When might a vehicle’s “privacy” be a critical success factor? What about its connectivity?

18) Give the general system requirements for a browser application. How did its designers meet them? Give three examples of browser requirement tensions. How are they met?

19) How do mobile phones meet the general system requirements, first as hardware and then as software?

20) Give examples of usability requirements for hardware, software and HCI. Why does the requirement change by level? What is “usability” on a community level?

21) Are reliability and security really distinct? Can a system be reliable but insecure, unreliable but secure, unreliable and insecure, or reliable and secure? Give examples. Can a system be functional but not usable, not functional but usable, not functional or usable, or both functional and usable? Give examples.

22) Performance is taking opportunities and avoiding risks. Yet while mistakes and successes are evident, missed opportunities and mistakes avoided are not. Explain how a business can fail by missing an opportunity, with WordPerfect vs Word as an example. Explain how a business can succeed by avoiding risks, with air travel as an example. What happens if you only maximize opportunity? What happens if you only minimize risk? Give examples. How does nature both take opportunities and avoid risks? Should designers do this too?

23) Describe the opportunity enhancing general system performance requirements, with an IT example of each. When would you give them priority? Describe the risk reducing performance requirements, with an IT example of each. When would you give them priority?

24) What is the Version 2 paradox? Give an example from your experience, of software that got worse on an update. You can use a game example. Why does this happen? How can designers avoid this?

25) Define extendibility for any system. Give examples for a desktop computer, a laptop computer and a mobile device. Give examples of software extendibility, for email, word processing and game applications. What is personal extendibility? Or community extendibility?

26) Why is innovation so hard for advanced systems? What stops a system being secure and open? Or powerful and usable? Or reliable and flexible? Or connected and private? How can such diverse requirements ever be reconciled?

27) Give two good reasons to have specialists in a large computer project team. What happens if they disagree? Why are cross-disciplinary integrators also needed?

2.10 Project Development

The days when programmers could just list a system’s functions and then code them are gone, if they ever existed. Today, design involves not only many specialties but also their interaction.

A large system development project could involve up to eight specialist groups, with distinct requirements, analysis and testing (Table 2.4). Note that Chapter 3 explains legitimacy analysis.

Smaller projects might break down into four groups:

- Actions: Functionality and usability.

- Interactions: Security and extendibility.

- Adapting: Reliability and flexibility.

- Interchanges: Connectivity and privacy.

Even smaller projects might only have two teams, one for opportunities and one for risks, while obviously a one-person project will have just one goal, of performance.
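The way the eight requirements collapse into four groups, two teams or one goal can be sketched as a small lookup. This is only an illustrative sketch: the group names come from the list above, the opportunity or risk tag follows this chapter's split of requirements into those that take opportunities and those that reduce risks, and the function name `teams` is a hypothetical helper, not part of any method described here.

```python
# Each requirement is tagged with its four-group team (from the list above)
# and with whether it takes opportunities or reduces risks.
REQUIREMENTS = {
    "functionality": ("Actions", "opportunity"),
    "usability": ("Actions", "risk"),
    "security": ("Interactions", "risk"),
    "extendibility": ("Interactions", "opportunity"),
    "reliability": ("Adapting", "risk"),
    "flexibility": ("Adapting", "opportunity"),
    "connectivity": ("Interchanges", "opportunity"),
    "privacy": ("Interchanges", "risk"),
}


def teams(size: int) -> dict[str, list[str]]:
    """Split the eight requirements into 8, 4, 2 or 1 teams (hypothetical helper)."""
    if size not in (1, 2, 4, 8):
        raise ValueError("size must be 1, 2, 4 or 8")
    result: dict[str, list[str]] = {}
    for requirement, (group, kind) in REQUIREMENTS.items():
        # Pick the label that defines a team at this project size.
        key = {8: requirement, 4: group, 2: kind, 1: "performance"}[size]
        result.setdefault(key, []).append(requirement)
    return result


for size in (4, 2, 1):
    print(size, teams(size))
```

Whatever the team count, the same eight requirements are covered; only the boundaries between specialists move.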

Design tensions can be reduced by agile methods where specialists talk more to each other and stakeholders, but advanced system development also needs innovators who can cut across specialist boundaries to resolve design tensions. This requires people who are trained in more than one discipline.

| Requirement | Code | Analysis | Testing |
|---|---|---|---|
| Functionality | Application | Task | Business |
| Usability | Interface | Usability | User |
| Security | Access control | Threat | Penetration |
| Extendibility | Plug-ins | Standards | Compatibility |
| Reliability | Error recovery | Stress | Load |
| Flexibility | Preferences | Contingency | Situation |
| Connectivity | Network | Channel | Communication |
| Privacy | Rights | Legitimacy | Community |

Table 2.4: A breakdown of project analysis and testing

2.9 Design Tensions and Innovation

A design tension arises when improving one design requirement makes another worse. Putting two different demands on the same constituent often gives a design tension, e.g. castle walls that protect against attack must still have a gate to let supplies in, and that entrance reduces security. Likewise, computers that aim to deny virus attacks may still need plug-in software hooks. These contrasts are not anomalies but are built into the nature of systems.

Early in its development, a product can be easily improved in many ways so there are few design tensions, but as requirements are met the performance area increases, i.e. the system gets better. This improvement increases design tensions: one can imagine the lines between the requirements tightening like stretched rubber bands as the web of performance gets bigger. In developed systems, the tension is so “tight” that improving any one performance criterion will pull back one or more others. This gives the Version 2 Paradox, where designers spend years “improving” an already successful product to give a Version 2 that actually performs worse!

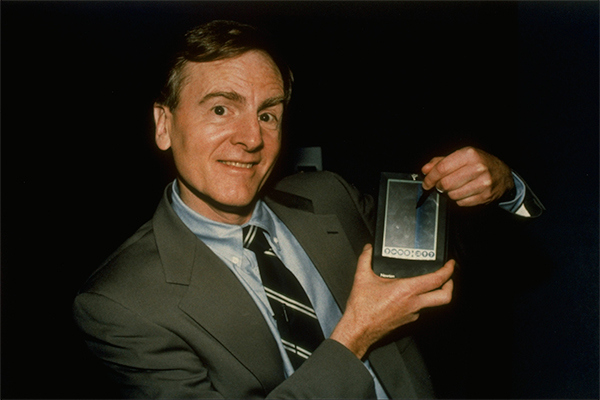

To improve a complex system one cannot just improve one criterion, i.e. just pull one corner of its performance web. For example, in 1992 Apple CEO Sculley introduced the hand-held Newton, claiming that portable computing was the future (Figure 2.4). We now know he was right, but in 1998 Apple dropped the line due to poor sales. The Newton’s small screen made data entry hard, i.e. the portability gain was nullified by a usability loss. Only when Palm’s Graffiti language improved handwriting recognition did the personal digital assistant (PDA) market revive. Sculley’s portability innovation was only half the design answer; the other half was resolving the usability problems that the improvement had created. Innovative design requires cross-disciplinary generalists to resolve such design tensions.

In general system design, too much focus on any one criterion gives diminishing returns, whether it is functionality, security (OECD, 1996), extendibility (De Simone & Kazman, 1995), privacy (Regan, 1995), usability (Gediga et al., 1999) or flexibility (Knoll & Jarvenpaa, 1994). Improving one aspect alone of a performance web can even reduce performance, i.e. “bite back” (Tenner, 1997), e.g. a network that is so secure no-one uses it. Advanced system performance requires balance, not just one-dimensional design “excellence”.

2.8 The Web of System Performance

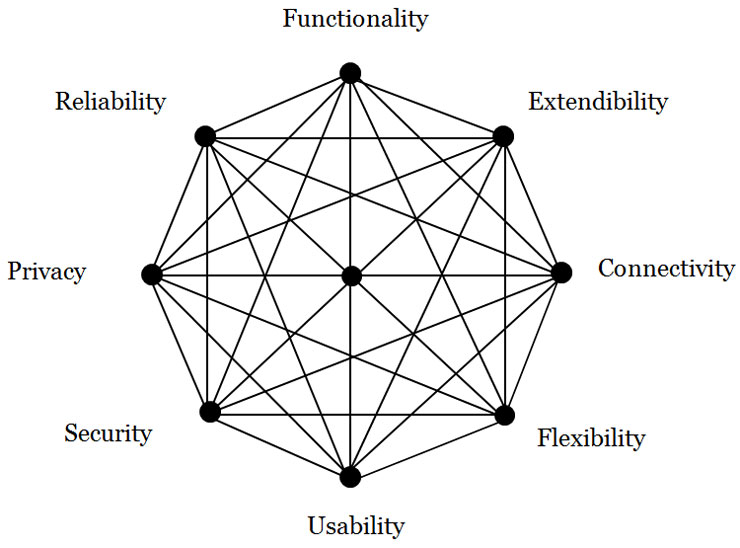

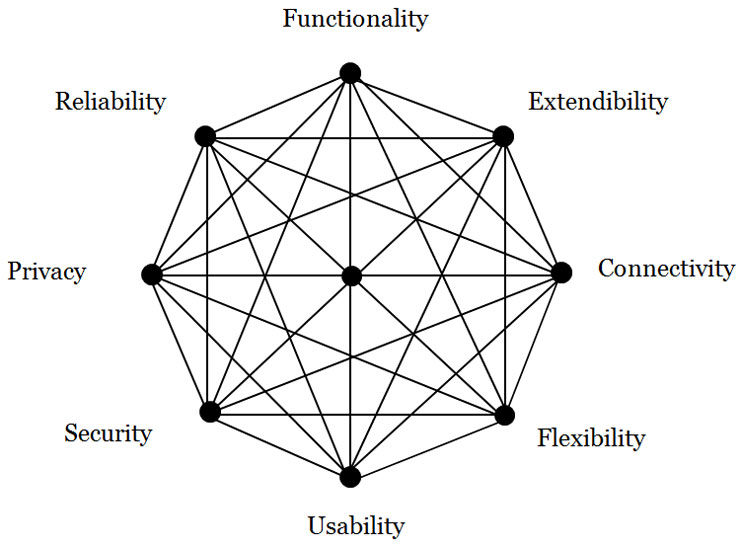

Figure 2.3 shows the web of system performance (WOSP), which is a general system design space where the:

- Area reflects the overall system performance.

- Shape reflects the requirement weights.

- Lines reflect the requirement tensions.

In performance, taking opportunities is as important as reducing risk (Pinto, 2002). So the WOSP space has four active requirements that are about taking opportunities and four passive ones that are about reducing risks. The active requirements are: functionality, flexibility, extendibility, connectivity. The passive requirements are: security, reliability, privacy, usability.

The weightings of each requirement vary with the environment, e.g. security is more important in threat scenarios and extendibility more important in opportunity situations.
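To make "area reflects performance" concrete, the web can be treated as a radar-chart polygon with one axis per requirement. The following is only a sketch under stated assumptions: the 0 to 1 scores are invented, the axis order is chosen for illustration (the polygon area depends on which requirements sit next to each other), and `web_area` is a hypothetical helper rather than a formula given in this chapter.

```python
import math

# Axis order is an assumption made for this sketch; the area of a radar
# polygon depends on which requirements are drawn next to each other.
REQUIREMENTS = [
    "functionality", "usability", "security", "extendibility",
    "reliability", "flexibility", "connectivity", "privacy",
]


def web_area(scores: dict[str, float]) -> float:
    """Area of the polygon whose vertices lie on equally spaced axes."""
    radii = [scores[name] for name in REQUIREMENTS]
    n = len(radii)
    wedge = math.sin(2 * math.pi / n)
    # Add up the triangle between each pair of neighbouring axes.
    return 0.5 * wedge * sum(radii[i] * radii[(i + 1) % n] for i in range(n))


# Two hypothetical systems with the same total score (4.0) but different shapes.
balanced = {name: 0.5 for name in REQUIREMENTS}
lopsided = dict(balanced, functionality=1.0, usability=0.0)

print(round(web_area(balanced), 3))  # 0.707
print(round(web_area(lopsided), 3))  # 0.619
```

In this toy model the lopsided web encloses less area than the balanced one even though the scores add up to the same total, which is one way to read the claim that shape matters as well as size.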

The requirement criteria of Figure 2.3 are logically modular, so they have no inherent contradictions, e.g. a bullet-proof plexiglass room can be secure but not private, while encrypted files can be private but not secure. Reliability provides services while security denies them (Jonsson, 1998), so a system can be reliable but insecure, unreliable but secure, unreliable and insecure or reliable and secure. Likewise, functionality need not deny usability (Borenstein & Thyberg, 1991), nor need connectivity deny privacy. Cross-cutting requirements (Moreira et al., 2002) can be reconciled by innovative design.

2.7 Requirements Engineering

Requirements engineering aims to define a system’s purposes, but if its main constituents specialize, how can the purpose of the whole system be defined? The approach taken here is to specify the requirements for each constituent separately, then leave how these requirements are reconciled in a specific environment to the art of system design.

In general, a system performs by interacting with its environment to gain value and avoid loss. In Darwinian terms, what does not survive dies out and what does lives on. Any system in this situation needs a boundary to separate it from the world, a structure to support its existence, effectors to act upon the environment around it, and receptors to monitor the world for risks and opportunities (see Table 2.2). The requirement to reproduce is here ignored as it is not relevant to computing and adds a time dimension to the model.

| Constituent | Requirement | Definition |
|---|---|---|
| Boundary | Security | To deny unauthorized entry, misuse or takeover by other entities. |
| | Extendibility | To attach to or use outside elements as system extensions. |
| Structure | Flexibility | To adapt system operation to new environments. |
| | Reliability | To continue operating despite system part failure. |
| Effector | Functionality | To produce a desired change on the environment. |
| | Usability | To minimize the resource costs of action. |
| Receptor | Connectivity | To open and use communication channels. |
| | Privacy | To limit the release of self-information by any channel. |

Table 2.2: General system constituents and requirements

For example, cells first evolved a boundary membrane, then organelle and nuclear structures for support and control; then eukaryotic cells evolved flagella to move, and finally protozoa got photo-receptors (Alberts et al., 1994). We also have a skin boundary, internal structures for metabolism and control, muscle effectors and sense receptors. Computers also have a case boundary, a motherboard internal structure, printer or screen effectors and keyboard or mouse receptors.

Four main constituents, each with a risk and an opportunity requirement, give eight general performance requirements as follows:

a) Boundary constituents manage the system boundary. They can be designed to deny outside things entry (security) or to use them (extendibility). In computing, virus protection is security and system add-ons are extendibility (Figure 2.2). In people, the immune system gives biological security and tool-use illustrates extendibility.

b) Structure constituents manage internal operations. They can be designed to limit internal change to reduce faults (reliability), or to allow internal change to adapt to outside changes (flexibility). In computing, reliability reduces and recovers from error, and flexibility is the system preferences that allow customization. In people, reliability is the body fixing a cell “error” that might cause cancer, while the brain learning illustrates flexibility.

c) Effector constituents manage environment actions, so can be designed to maximize effects (functionality) or minimize resource use (usability). In computing, functionality is the menu functions, while usability is how easy they are to use. In people, functionality gives muscle effectiveness, and usability is metabolic efficiency.

d) Receptor constituents manage signals to and from the environment, so can be designed to open communication channels (connectivity) or close them (privacy). Connected computing can download updates or chat online, while privacy is the power to disconnect or log off. In people, connectivity is conversing, and privacy is the legal right to be left alone. In nature, privacy is camouflage, and the military calls it stealth. Note that privacy is the ownership of self-data, not secrecy. It includes the right to make personal data public.

These eight general requirements map well to current requirements engineering terms (Table 2.3).

| Requirement | Requirements Engineering Synonyms |
|---|---|
| Functionality | Effectualness, capability, usefulness, effectiveness, power, utility. |
| Usability | Ease of use, simplicity, user friendliness, efficiency, accessibility. |
| Extendibility | Openness, interoperability, permeability, compatibility, standards. |
| Security | Defense, protection, safety, threat resistance, integrity, inviolable. |
| Flexibility | Adaptability, portability, customizability, plasticity, agility, modifiability. |
| Reliability | Stability, dependability, robustness, ruggedness, durability, availability. |
| Connectivity | Networkability, communicability, interactivity, sociability. |
| Privacy | Tempest proof, confidentiality, secrecy, camouflage, stealth, encryption. |

Table 2.3: System requirement synonyms

Note that as what is exchanged changes by system level, so do the names preferred:

a) Hardware systems exchange energy. So for them functionality is often called power, e.g. hardware with a high CPU cycle rate. Usable hardware uses less power for the same result, e.g. mobile phones that last longer. Reliable hardware is rugged enough to work if you drop it, and flexible hardware is mobile enough to work if you move around, i.e. change environments. Secure hardware blocks physical theft, e.g. by laptop cable locks, and extendible hardware has ports for peripherals to be attached. Connected hardware has wired or wireless links, and private hardware is tempest proof, i.e. it does not physically leak energy.

b) Software systems exchange information. Functional software has many ways to process information, while usable software uses less CPU processing (“lite” apps). Reliable software avoids errors or recovers from them quickly. Flexible software is operating system platform independent. Secure software cannot be corrupted or overwritten. Extendible software can access OS program library calls. Connected software has protocol “handshakes” to open read/write channels. Private software can encrypt information so that others cannot see it.

c) HCI systems exchange meaning, including ideas, feelings and intents. In functional HCI the human-computer pair is effectual, i.e. meets the user task goal. Usable HCI requires less intellectual, affective or conative effort, i.e. is intuitive. Note that cognitions divide into intellectual thoughts, affective emotions, and conative will. Reliable HCI avoids or recovers from unintended user errors by checks or undo choices, e.g. the web Back button is an HCI invention. Flexible HCI lets users change language, font size or privacy preferences, as each person is a new environment to the software. Secure HCI avoids identity theft by user password. Extendible HCI lets users use what others create, e.g. mash-ups and third-party add-ons. Connected HCI communicates with others, while privacy includes not getting spammed or being located on a mobile device. Each level applies the same ideas to a different system view. The community level is discussed in Chapter 3.

2.6 Constituent Parts

In general, what are the parts of a system? The answer is not always obvious, as the “parts” of a program could be lines of code, variables or sub-programs. A system’s elemental parts are those not formed of other parts, e.g. a mechanic stripping a car stops at the bolt as an elemental part of that level. To decompose it further gives atoms, which are physical not mechanical elements. Each level has a different elemental part: physics has quantum waves, information has bits, psychology has qualia and society has citizens (Table 2.1). Note that a quale (plural qualia) is a basic subjective experience, like the pain of a headache. Elemental parts then form into more complex parts, as for example bits form bytes.

| Level | Element | Other parts |
|---|---|---|
| Community | Citizen | Friendships, groups, organizations, societies. |
| Personal | Qualia | Cognitions, attitudes, beliefs, feelings, theories. |
| Informational | Bit | Bytes, records, files, commands, databases. |
| Physical | Quantum waves? | Quarks, electrons, nucleons, atoms, molecules. |

Table 2.1: System elements by level

A system’s constituent parts are those that interact to form the system but are not part of other parts (Esfeld, 1998), e.g. the body frame of a car is a constituent part because it is part of the car but not part of any other car part. So, dismantling a car entirely gives elemental parts, not constituent parts, e.g. a bolt on a wheel is not a constituent part if it is part of a wheel. To understand a system one must identify its constituent parts not only its elemental parts.

How elemental parts give constituent parts is the system structure, e.g. to say a body is composed of cells ignores its constituent structure: how parts combine into higher parts or sub-systems. Only in system heaps, like a pile of sand, are elemental parts also constituent parts. An advanced system like the body is not a heap because the cell elements combine to form sub-systems just as the elemental parts of a car do, e.g. a wheel as a car constituent has many sub-parts. Just sticking together arbitrary constituents in design without regard to their interaction has been called the Frankenstein effect (Tenner, 1997), as Dr. Frankenstein made a human being by putting together the best of each individual body part he could find in the graveyard – the result was a monster. The body’s constituent parts are, for example, the digestive system and the respiratory system, not the head, torso and limbs. The specialties of medicine often describe body constituents.
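The difference between elemental and constituent parts can be sketched as a part tree, where elemental parts are the leaves and constituent parts are the direct children of the system. This is a minimal sketch: the `Part` class and both functions are hypothetical, and apart from the body frame and the wheel bolt the part names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    name: str
    subparts: list["Part"] = field(default_factory=list)


def elemental_parts(part: Part) -> list[str]:
    """Parts not formed of other parts: the leaves of the tree."""
    if not part.subparts:
        return [part.name]
    return [name for sub in part.subparts for name in elemental_parts(sub)]


def constituent_parts(system: Part) -> list[str]:
    """Parts of the system that are not part of any other part: its direct children."""
    return [sub.name for sub in system.subparts]


# A car has structure: its elements combine into sub-systems.
car = Part("car", [
    Part("body frame", [Part("panel"), Part("rivet")]),
    Part("wheel", [Part("bolt"), Part("rim")]),
])

# A heap has none: every element is also a constituent.
heap = Part("sand pile", [Part("grain 1"), Part("grain 2")])

print(constituent_parts(car))   # ['body frame', 'wheel']
print(elemental_parts(car))     # ['panel', 'rivet', 'bolt', 'rim']
print(elemental_parts(heap) == constituent_parts(heap))  # True
```

The bolt appears among the car's elemental parts but not among its constituents, because it is part of the wheel. Only for the heap do the two lists coincide.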

A general model of system design needs to specify the constituent parts of systems in general.

2.5 Holism and Specialization

In general systems theory, any system consists of:

a) Its parts, and

b) Their interactions.

Holism arises when the performance of a system of parts is not defined by reducing it to those parts alone. It applies especially to systems that arise primarily from part interactions. Even simple parts, like air molecules, can interact strongly to form a chaotic system like the weather (Lorenz, 1963). Gestalt psychologists called the concept of the whole being more than the sum of its parts holism, as a curved line is just a curve, but a curved line in a face becomes a “smile”. Holism is how system parts change by interacting with others. Holistic systems are individualistic, because changing one part, by its interactions, can cascade to change the whole system drastically. People rarely look the same because one gene change can change everything. The brain is also holistic — one thought can change everything that you know.

Yet a system’s parts need not be simple. The body began as one cell, a zygote, that divided into all the cells of the body, including liver, skin, bone and brain cells. Note that deciphering the human genome gave the pieces of the genetic puzzle, not how they connect, just as getting all the pieces of a jigsaw puzzle is not the same as putting them together. Likewise, in early societies most people did most things, but today we have millions of specialist jobs. A system’s specialization is the degree to which its constituent parts differ in form and action.

Holism (complex interactions) and specialization (complex parts) are the hallmarks of evolved systems. The former gives the levels of the last chapter and the latter gives the system part specializations discussed now.

2.4 Non-functional Requirements

In traditional software engineering, criteria like usability are said to be “quality” requirements that affect functional goals but cannot stand alone (Chung et al., 1999). For decades, these non-functional requirements (NFRs), or “-ilities”, were considered second class requirements. They defied categorization, except to be non-functional. How exactly they differed from functional goals was never made clear (Rosa et al., 2001), yet most modern systems have more lines of interface, error and network code than functional code, and increasingly fail for “unexpected” non-functional reasons (Cysneiros & Leite, 2002, p. 699).

The logic is that because NFRs such as reliability cannot exist without functionality, they are subordinate to it. Yet by the same logic, functionality is subordinate to reliability as it cannot exist without it, e.g. an unreliable car that will not start has no speed function, nor does a car that is stolen (low security), nor one that is too hard to drive (low usability).

NFRs not only modify performance but define it. In nature, functionality is not the only key to success, e.g. viruses with no ability to reproduce in themselves simply hijack this function from other cells. Functionality differs from the other system requirements only in being more obvious to us. It is really just one of many requirements. The distinction between functional and non-functional requirements is a bias, like seeing the sun going round the earth because we live on the earth. There is no difference at all between functional and non-functional requirements – they are all just requirements.

2.3 What is a Design Space?

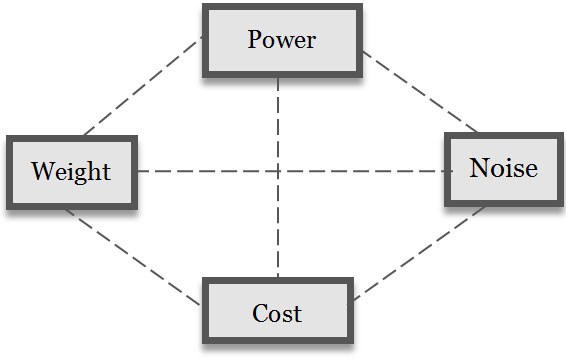

A design space is a combination of requirement dimensions. Architect Christopher Alexander observed that vacuum cleaners with powerful engines and more suction were also heavier, noisier and cost more (Alexander, 1964). One performance criterion has a best point, but two criteria, like power and cost, give a best line. The efficient frontier of two performance criteria is a line, of the maxima of one for each value of the other (Keeney & Raiffa, 1976). System designers must choose a point in a design space with many “best” points, e.g. a cheap, heavy but powerful vacuum cleaner, or a light, expensive and powerful one (Figure 2.1). The efficient frontier is a set of “best” point trade-offs in a design space, given that not all criterion combinations may be achievable, e.g. a cheap and powerful vacuum cleaner may be impossible. Advanced system performance is not a one-dimensional ladder to excellence but a station with many trains serving many destinations.
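The efficient frontier can be sketched as a filter that keeps every design not beaten on all criteria by some other design. This is only an illustration under stated assumptions: the four vacuum cleaners and their power and cost figures are invented, and `Design`, `dominates` and `efficient_frontier` are hypothetical names.

```python
from typing import NamedTuple


class Design(NamedTuple):
    name: str
    power: float  # suction power, higher is better (invented units)
    cost: float   # price, lower is better (invented units)


def dominates(a: Design, b: Design) -> bool:
    """True if a is at least as good as b on both criteria and strictly better on one."""
    at_least_as_good = a.power >= b.power and a.cost <= b.cost
    strictly_better = a.power > b.power or a.cost < b.cost
    return at_least_as_good and strictly_better


def efficient_frontier(designs: list[Design]) -> list[Design]:
    """Keep only the designs that no other design dominates."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


designs = [
    Design("budget", power=2.0, cost=50.0),
    Design("midrange", power=5.0, cost=120.0),
    Design("overpriced", power=4.0, cost=150.0),  # weaker and dearer than "midrange"
    Design("premium", power=9.0, cost=300.0),
]

frontier = efficient_frontier(designs)
print([d.name for d in frontier])  # ['budget', 'midrange', 'premium']
```

Three of the four designs survive, and none of them is "the best": each is the most powerful option at its price. Choosing among them depends on how the designer weighs power against cost.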

To see other examples we can look at nature. Successful life includes flexible viruses, reliable plants, social insects and powerful tigers, with the last being the endangered species. There is no “best” animal because in nature performance is multi-dimensional, and a multi-dimensional design space gives many best points. In evolution, not just the strong are fit, and too much of a good thing can be fatal, as over-specialization can lead to extinction.

Likewise, computing has no “best”. If computer performance were just more processing we would all want supercomputers, but many prefer laptops with less power over desktops with more (David et al., 2003). On the information level, blindly adding software functions gives bloatware, also called featuritis or scope creep. The result is applications with many features no-one needs.

Design is the art of reconciling many requirements in a particular system form, e.g. a quiet and powerful vacuum cleaner. It is the innovative synthesis of a system in a design requirements space (Alexander, 1964). The system then performs according to its requirement criteria.

Most design spaces are not one-dimensional, e.g. Berners-Lee chose HTML for the World Wide Web for its flexibility (across platforms), reliability and usability (easy to learn). An academic conference rejected his WWW proposal because HTML was inferior to SGML (Standard Generalized Markup Language). Like the pundits and the elephant, academic specialists saw only their specialty criterion, not the system as a whole. Even after the World Wide Web’s phenomenal success, the blindness of specialists to a general system view remained:

“Despite the Web’s rise, the SGML community was still criticising HTML as an inferior subset … of SGML” (Berners-Lee, 2000, p. 96)

What has changed since academia found the World Wide Web inferior? Not a lot. If it is any consolation, an equally myopic Microsoft also found Berners-Lee’s proposal unprofitable, from a business perspective. In the light of the benefits both now freely take from the web, academia and business should re-evaluate their myopic criteria.