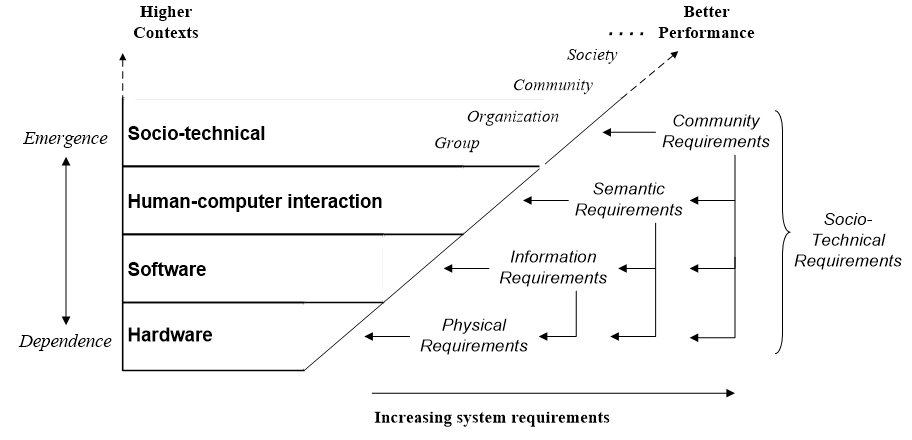

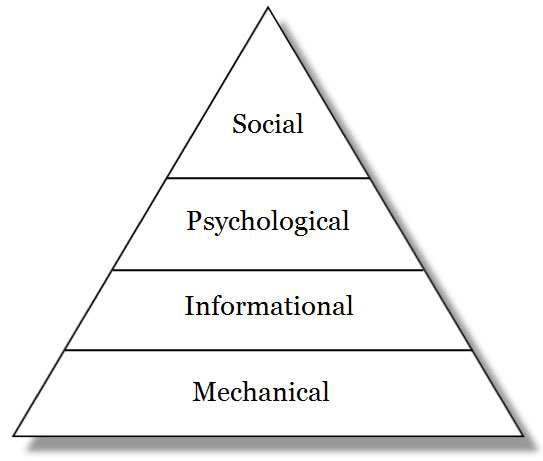

The aim of system design is to find problems early. A misplaced wall on an architect’s plan can be moved with the stroke of a pen, but once the wall is built, changing it is not so easy. Yet to design a system, one must first know its performance requirements. Establishing them is the job of requirements engineering, which analyzes stakeholder needs to specify what a system must do so that the stakeholders will sign off on the final product. It is basic to system design:

“The primary measure of success of a software system is the degree to which it meets the purpose for which it was intended. Broadly speaking, software systems requirements engineering (RE) is the process of discovering that purpose...” Nuseibeh & Easterbrook, 2000: p. 1

A requirement can be a particular value (e.g. uses SSL), a range of values (e.g. less than $100), or a criterion scale (e.g. is secure). Given a system’s requirements, designers can build it, but the computing literature does not agree on what those requirements are. One text lists usability, maintainability, security and reliability (Sommerville, 2004, p. 24), while the ISO 9126-1 quality model lists functionality, usability, reliability, efficiency, maintainability and portability (Losavio et al., 2004).
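The three kinds of requirement named above (a particular value, a range of values, a criterion scale) can be sketched as simple data types. This is only an illustrative sketch, not a method from the cited literature: the class names, the `is_met` and `unmet` helpers, the 0-to-1 security score and the 0.8 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValueRequirement:
    """Met only by one particular value, e.g. 'uses SSL'."""
    name: str
    expected: object

    def is_met(self, observed: object) -> bool:
        return observed == self.expected


@dataclass(frozen=True)
class RangeRequirement:
    """Met by any value below an exclusive upper bound, e.g. 'less than $100'."""
    name: str
    upper_bound: float

    def is_met(self, observed: float) -> bool:
        return observed < self.upper_bound


@dataclass(frozen=True)
class ScaleRequirement:
    """Judged on a criterion scale, e.g. 'is secure'.

    The numeric score and the threshold are hypothetical; the text does
    not say how such a scale would be measured.
    """
    name: str
    threshold: float

    def is_met(self, score: float) -> bool:
        return score >= self.threshold


def unmet(requirements, observations):
    """Return the names of the requirements that the observed system fails."""
    return [r.name for r in requirements if not r.is_met(observations[r.name])]


reqs = [
    ValueRequirement("transport", "SSL"),
    RangeRequirement("price_usd", 100.0),
    ScaleRequirement("security", 0.8),
]
observed = {"transport": "SSL", "price_usd": 120.0, "security": 0.9}
print(unmet(reqs, observed))  # ['price_usd']
```

The first two kinds can be checked mechanically, whereas the third needs someone to decide how the scale is scored.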

Berners-Lee made scalability a World Wide Web criterion (Berners-Lee, 2000) but others advocate open standards between systems (Gargaro et al., 1993). Business prefers cost, quality, reliability, responsiveness and conformance to standards (Alter, 1999), but software architects like portability, modifiability and extendibility (de Simone & Kazman, 1995). Others espouse flexibility (Knoll & Jarvenpaa, 1994) or privacy (Regan, 1995). On the issue of what computer systems need to succeed, the literature is at best confused, giving what developers call the requirements mess (Lindquist, 2005). It has ruined many a software project. It is the problem that agile methods address in practice and that this chapter now addresses in theory.

In current theories, each specialty sees only itself. Security specialists see security as availability, confidentiality and integrity (OECD, 1996), so to them, reliability is part of security. Reliability specialists see dependability as reliability, safety, security and availability (Laprie & Costes, 1982), so to them, security is part of a general reliability concept. Yet both cannot be true: security cannot be a part of reliability if reliability is a part of security. Similarly, a usability review finds functionality and error tolerance to be part of usability (Gediga et al., 1999), while a flexibility review finds scalability, robustness and connectivity to be aspects of flexibility (Knoll & Jarvenpaa, 1994). Academic specialties usually expand to fill the available theory space, but some specialties recognize their limits:

“The face of security is changing. In the past, systems were often grouped into two broad categories: those that placed security above all other requirements, and those for which security was not a significant concern. But … pressures … have forced even the builders of the most security-critical systems to consider security as only one of the many goals that they must achieve.” Kienzle & Wulf, 1998: p. 5

The obvious conclusion is that analyzing performance goals in isolation is now giving diminishing returns.

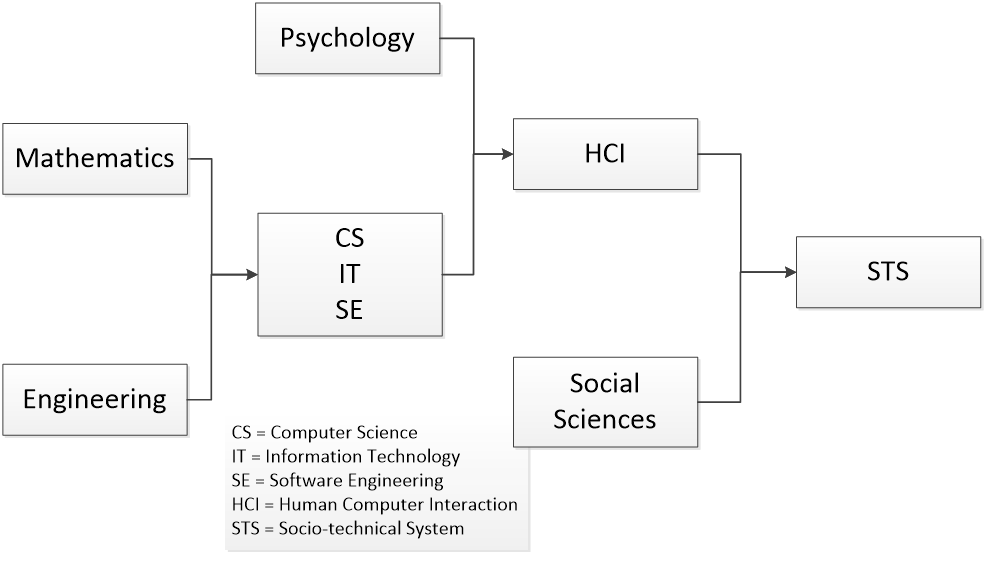

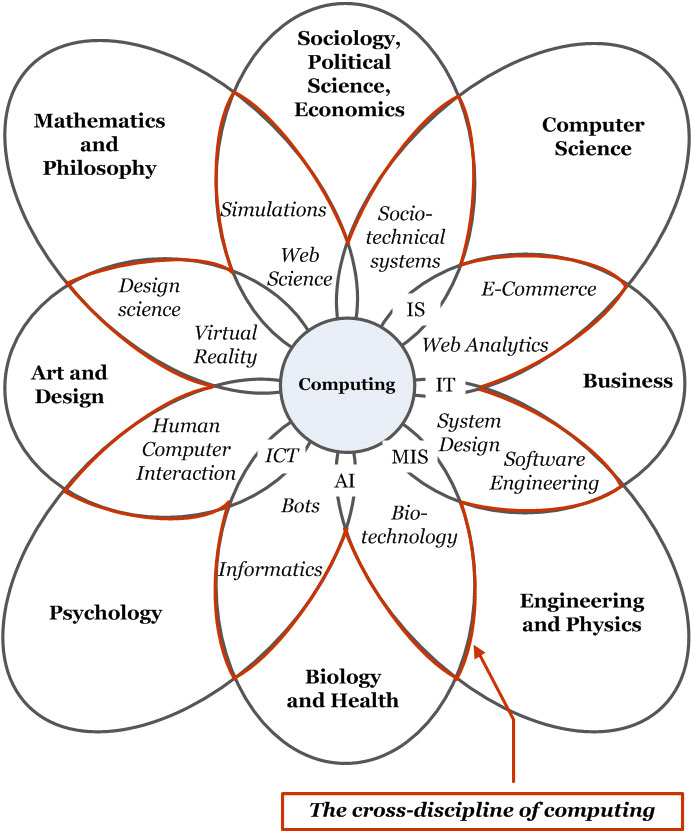

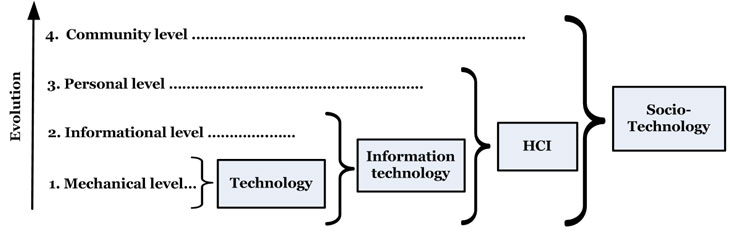

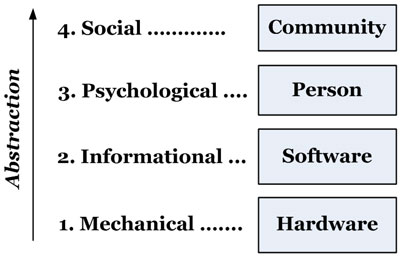

Even as HCI develops into a traditional academic discipline, computing has already moved on to add sociology to its list of paramours.
