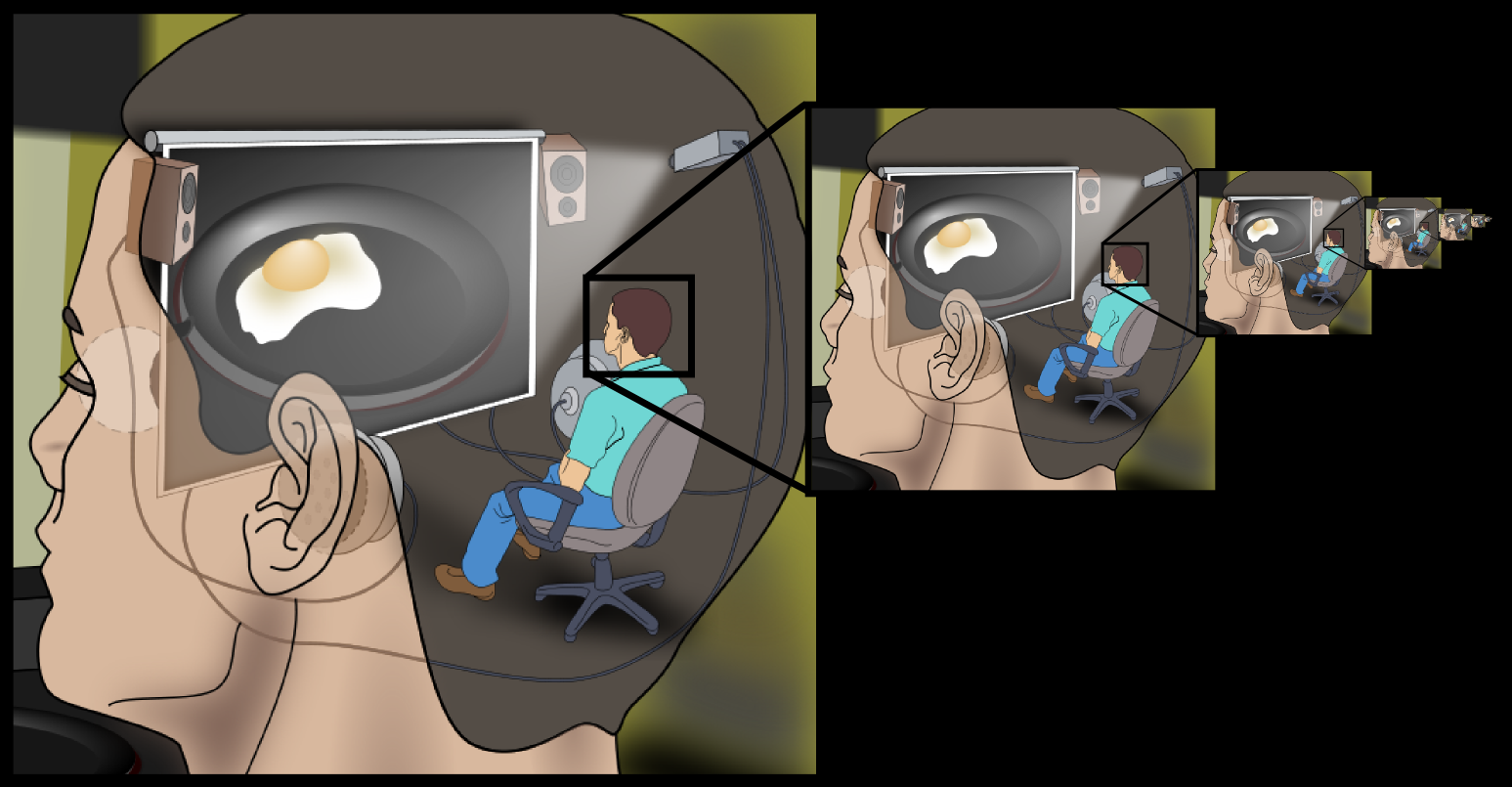

Different brain areas analyze sight, sound, and smell data that other areas use in thoughts, feelings, and actions, but how does all this activity bind together into one experience? Descartes' explanation was that all sense data is channeled through the pineal gland, which relays it to the mind, which is like a little man in the brain watching a movie. Yet by that logic, the little man would need another little man inside his head to observe what he sees, and so on, in an infinite regress (Dennett, 1991) (Figure 6.34). A little man in the brain is illogical, but physical realism isn't much better, as it concludes that each neuron in the brain:

“… doesn’t ‘know’ it is creating you in the process, but there you are, emerging from its frantic activity almost magically.” (Hofstadter & Dennett, 1981) p352.

The claim that neurons that cannot observe anything somehow act together so that “there you are” is even weaker than dualism. The mind-body problem of centuries ago lives on in neuroscience today as the binding problem:

“One of the most famous continuing questions in computational neuroscience is called ‘The Binding Problem’. In its most general form, ‘The Binding Problem’ concerns how items that are encoded by distinct brain circuits can be combined for perception, decision, and action.” (Feldman, 2013) p1.

The binding problem arises because distant processing hierarchies can't simply exchange data. They can't “talk”, as global workspace theory claims (6.1.6), because when a visual cortex nerve fires to register a line, it doesn't say “I saw a line” like a little person. It just fires a yes-no response like any other neuron. Binding that response to another feature, such as redness, requires higher processing in the same hierarchy. At each step in the hierarchy, a nerve can fire to trigger a motor response, but that is not an experience, because the nerve doesn't know why it fired. The six-layered visual cortex can process lines, shapes, colors, and textures, but the last nerve to fire in a sequence knows no more than the first. Integrating vision and smell would require a higher area that processes both outputs, but according to brain studies, this doesn't happen.

Different areas evolved to process sight, smell, sound, thoughts, feelings, touch, and memory, but no area evolved to integrate them all. If one had, the brain would be wired like a computer motherboard, with many lines to a central processor, but it isn't. Each brain area is encapsulated, so the smell, sight, and sound areas can't exchange whatever experiences they have with each other:

“Because of the principle of encapsulation, conscious contents cannot influence each other either at the same time nor across time, which counters the everyday notion that one conscious thought can lead to another conscious thought … content generators cannot communicate the content they generate to another content generator. For example, the generator charged with generating the color orange cannot communicate ‘orange’ to any other content generator because only this generator (a perceptual module) can, in a sense, understand and instantiate ‘orange’.” (Morsella et al., 2016) p12.

And even if higher processing tried to integrate all brain areas, it would be too slow, just as complex thought usually comes up with a witty retort only after a conversation is over. Our brain can integrate perceptions with memory to drive motor acts in less than a second, but if a single hierarchy did this, it would take much longer. The binding problem is that brain activities combine in a way that the brain's wiring doesn't support, so our unified experience of senses, feelings, thoughts, and actions should be impossible.

Encapsulation predicts that the hemispheres can't exchange data, so each sees only half the visual field. Yet cutting the nerves between the hemispheres doesn't produce any sense of loss:

“… despite the dramatic effects of callosotomy, W.J. and other patients never reported feeling anything less than whole. As Gazzaniga wrote many times: the hemispheres didn’t miss each other.” (Wolman, 2012).

Why don't split-brain patients know that their corpus callosum has been cut? If the optic nerve is cut, we know we are blind, as no data comes from the eyes. If an injury cuts the spinal cord, we know we are paralyzed, as no data comes from the legs. But when the millions of nerves joining the hemispheres are cut, both hemispheres carry on as before! Why doesn't the verbal hemisphere report a loss of data? If it normally saw the entire field through the other hemisphere, it should report being half blind, but it doesn't. It follows that it reports no missing data because none is missing.

Instead of data loss, dividing the hemispheres just divides consciousness. One patient couldn't smoke because when his right hand put a lit cigarette in his mouth, the left hand removed it, and another found her left hand slapping her awake if she overslept (Dimond, 1980) p434. Conflicts made simple tasks take longer: one patient found his left hand unbuttoning a shirt as the right tried to button it. Another found that when shopping, one hand returned to the shelf items the other had put in the basket. One patient struggled to get home because one half of his body tried to visit his ex-wife while the other wanted to go home. These extraordinary but well-documented cases show that cutting the corpus callosum produces two hemispheres with different experiences and opinions about what the body should do.

If the left hemisphere analyzes data only from the right visual field, our experience of a single visual field must arise in some other way. It is now proposed that the hemispheres synchronize their electromagnetic fields into one consciousness by means of the eight million nerves linking them (Pockett, 2017). The answer to the binding problem is then that consciousness causes integration, not the reverse, where consciousness is the ability to integrate information to yield adaptive action (Morsella, 2005).