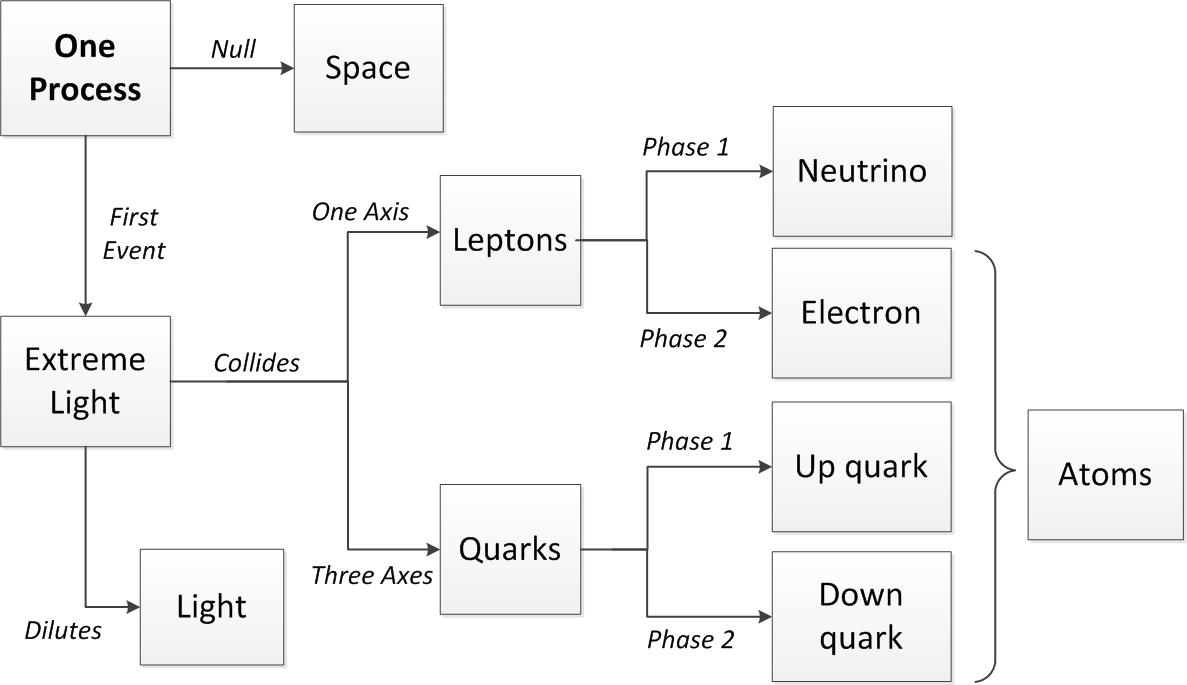

Science tests theories by comparing their predictions with what actually happens. In this case, processing predicts that light at the highest frequency will collide to create matter, while the standard view is that light never collides:

“Two photons cannot ever collide. In fact light is quantized only when interacting with matter.” Wikipedia, 2019.

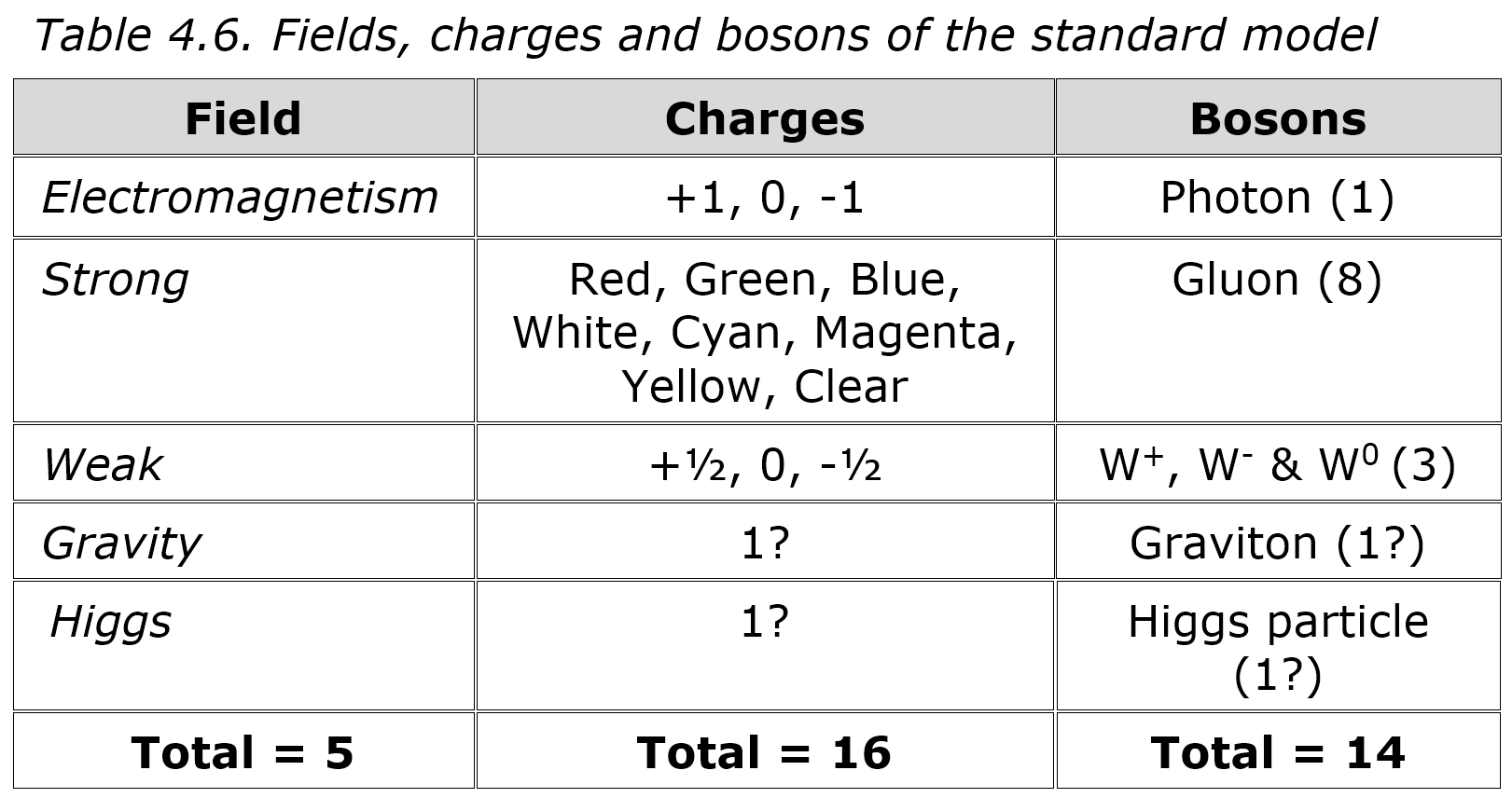

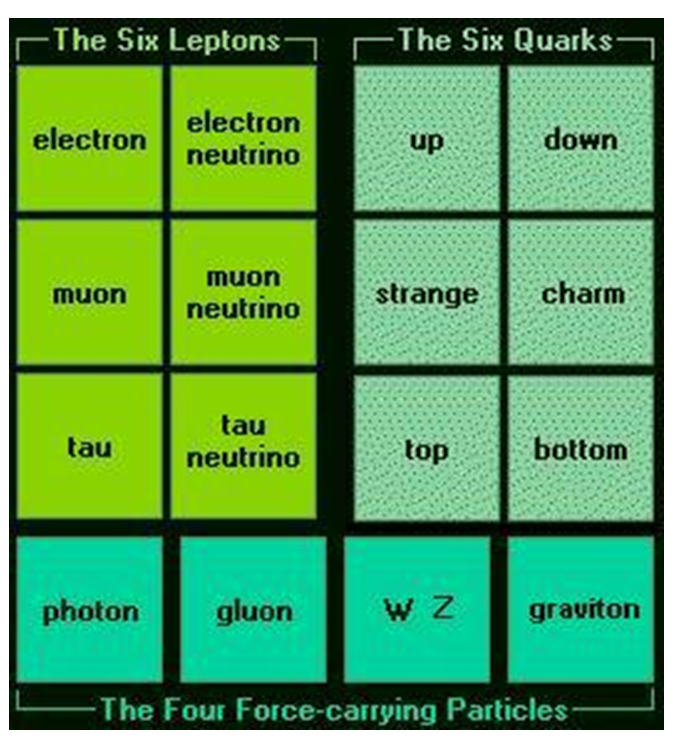

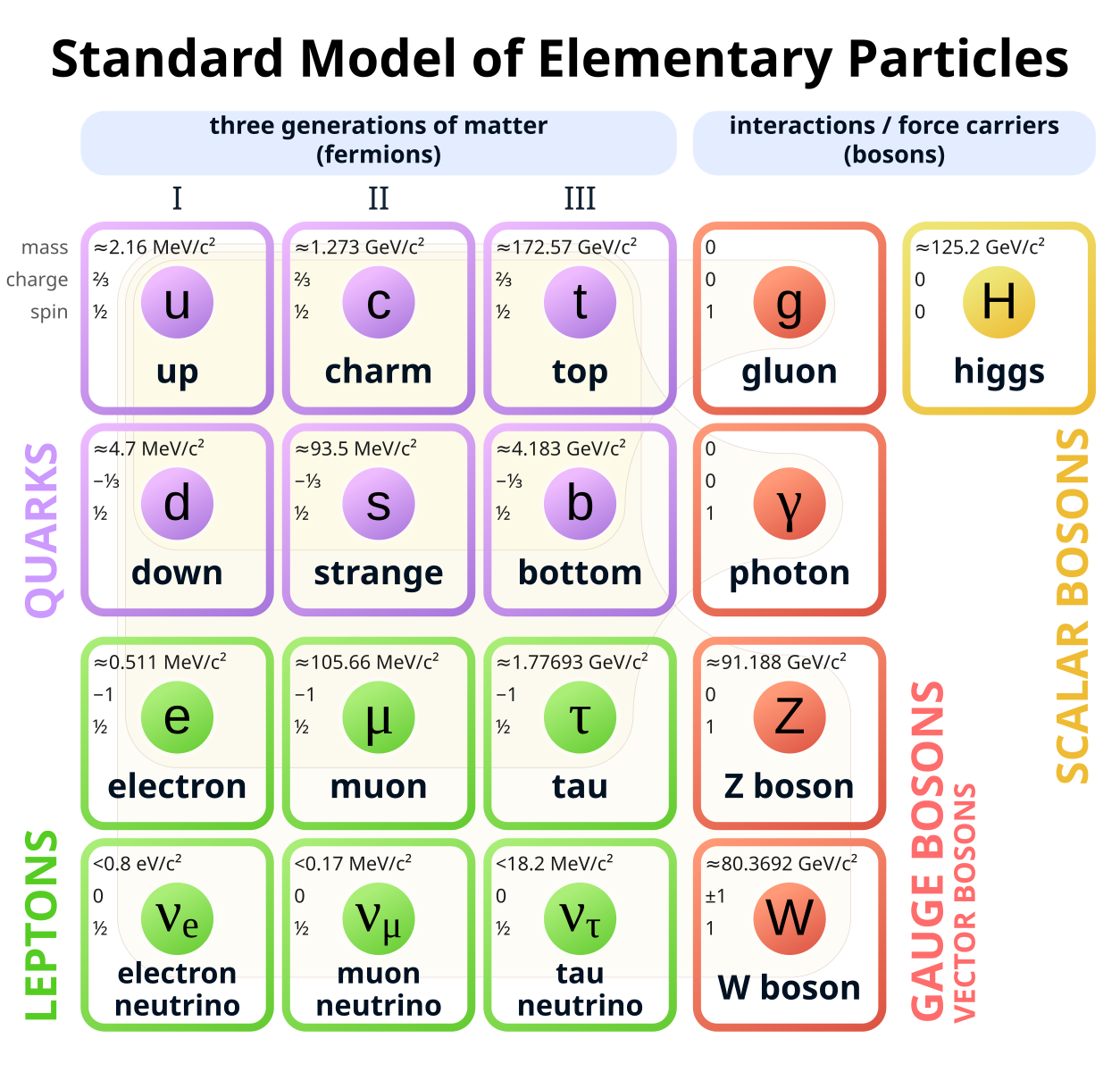

The standard model doesn’t let light collide because photons are bosons, which can share quantum states. But if photons are the core network process, then at a two-point wavelength they will overload the network and so collide. The evidence that light can collide to produce matter includes:

1. Confined photons have mass. A free photon is massless, but in a hypothetical 100% reflecting mirror box it has mass, because as the box accelerates, unequal photon pressure on its walls creates inertia (van der Mark & ’t Hooft, 2011). By this logic, photons confined at a point, in a standing quantum wave, will have mass.

2. Einstein’s equation. Einstein’s equation works both ways, so if mass can turn into energy in nuclear bombs, photon energy can become mass, as the Breit-Wheeler process allows.

3. Particle accelerator collisions create matter. Protons that collide and stay intact produce new matter that didn’t exist before, created from the collision energy, so high-energy photons could do the same.

4. Pair production. High-frequency light near a nucleus gives electrons and positrons that annihilate back into space.

5. Light collides. When laser photons at the Stanford Linear Accelerator hit an electron beam moving at almost the speed of light, some electrons knocked a photon back with enough energy to hit the photons behind it, giving electron-positron pairs that a magnetic field pulled apart to detect (Burke et al., 1997).
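
To put rough numbers on points 1, 2 and 4, the sketch below applies Einstein’s equation in both directions. It is an illustration only, not a calculation taken from the cited papers: the function names are hypothetical, the constants are rounded standard values, and the two-photon threshold assumes an idealized head-on collision in a vacuum, where the product of the photon energies must reach the square of the electron rest energy.

```python
# Illustrative sketch only: function names are hypothetical, constants are rounded.
ELECTRON_REST_ENERGY_MEV = 0.51099895   # m_e * c^2 in MeV
SPEED_OF_LIGHT_M_S = 299_792_458.0      # c in metres per second
JOULES_PER_MEV = 1.602176634e-13        # 1 MeV in joules


def confined_photon_mass_kg(energy_mev: float) -> float:
    """Mass equivalent m = E / c^2 of light energy held in a perfect mirror box."""
    return energy_mev * JOULES_PER_MEV / SPEED_OF_LIGHT_M_S ** 2


def pair_threshold_near_nucleus_mev() -> float:
    """Minimum photon energy to make an electron-positron pair near a nucleus.

    The nucleus takes up momentum, so the photon needs about 2 * m_e * c^2
    (nuclear recoil is neglected here).
    """
    return 2 * ELECTRON_REST_ENERGY_MEV


def head_on_partner_threshold_mev(first_photon_mev: float) -> float:
    """Minimum energy of a second photon meeting the first head-on.

    Assumes the idealized two-photon condition E1 * E2 >= (m_e * c^2)^2.
    """
    if first_photon_mev <= 0:
        raise ValueError("photon energy must be positive")
    return ELECTRON_REST_ENERGY_MEV ** 2 / first_photon_mev


if __name__ == "__main__":
    print("Pair threshold near a nucleus (MeV):", pair_threshold_near_nucleus_mev())
    print("Partner threshold for a 1000 MeV photon (MeV):",
          head_on_partner_threshold_mev(1000.0))
    print("Mass equivalent of 1 MeV of confined light (kg):",
          confined_photon_mass_kg(1.0))
```

Under these assumptions, two equal photons meeting head-on each need at least the electron rest energy, about 0.511 MeV, while a far more energetic photon can make a pair with a much weaker partner.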

That extreme light colliding in a vacuum can become matter is a plausible prediction that experiments can test. If it is then found that light can become matter, the boson-fermion divide of the standard model falls because bosons can turn into fermions.

Physicists expected accelerator collisions to unlock the secrets of our universe, but they didn’t. They found transient flashes, not permanent particles. In nature, what doesn’t survive isn’t the future, so these ephemera are evolutionary dead-ends that led nowhere because they weren’t stable. If matter evolved from light, the future of physics lies in colliding light using light colliders, not matter colliders.

That matter causes everything is just a theory and scientists who don’t question their theories are priests. Light is simpler than matter, and that matter came from high-frequency light is a reasonable theory that can be tested, so let the facts decide whether it is right or wrong.

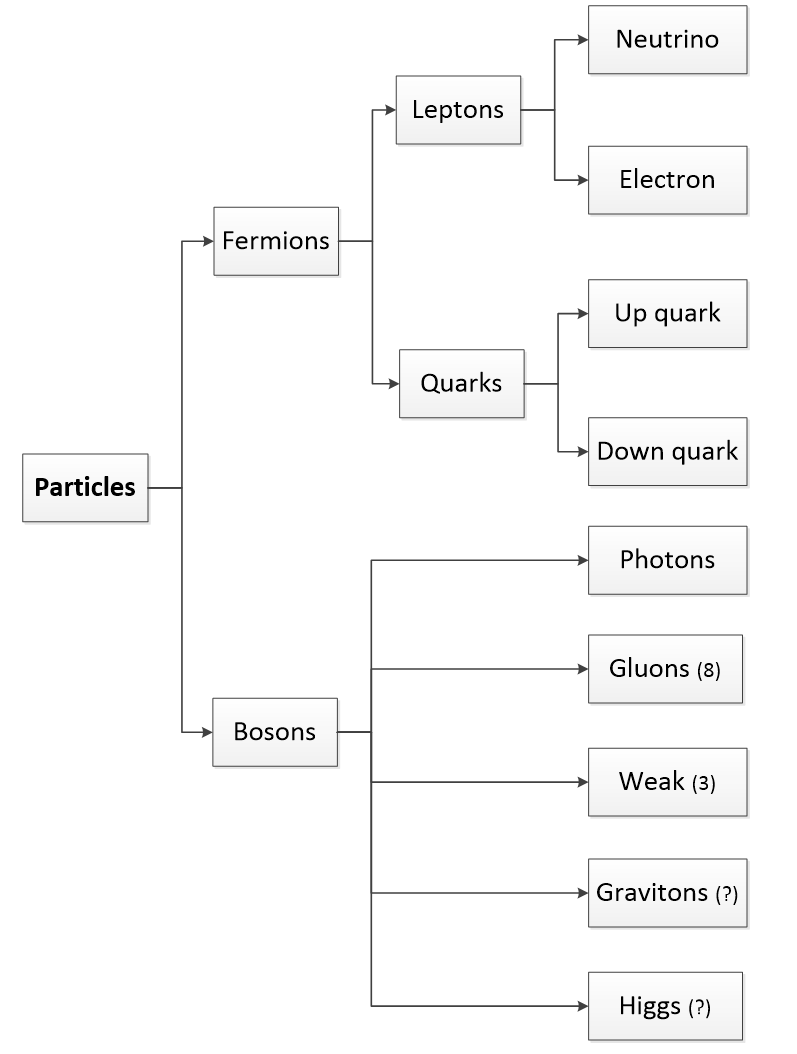

A matter substance should break down into basic bi
